Fire and Smoke Detection

Algorithm Introduction

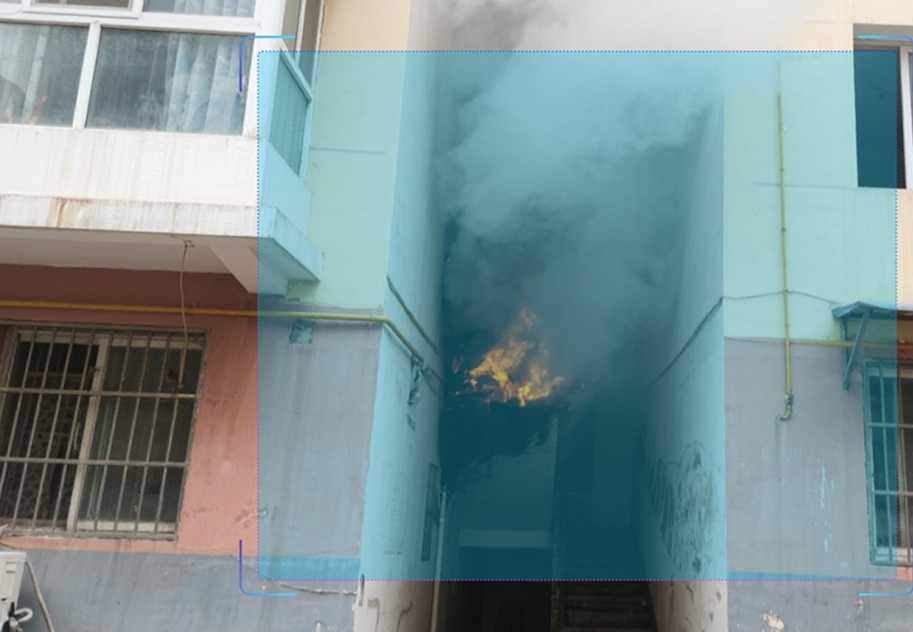

The algorithm applies AI vision analysis to video and image data captured by cameras to automatically detect fire incidents and raise alerts in real time. It identifies two distinct types of fire: dense smoke (opaque particulate matter produced by combustion) and open flames (visibly glowing combustion flames, deep red to light yellow in color). It is widely applicable in fire-prone environments.

- Brightness: at least 50% of the pixels in the detection area must be bright (grayscale value > 40).

- Image resolution: detection performs best at 1344×800.

- Target size: for resolutions above 1344×800, targets should be at least 40 pixels wide and 66 pixels tall.
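The brightness and target-size requirements above can be checked on sample frames before a camera goes live. The following is a minimal Python sketch under stated assumptions: the grayscale conversion uses ITU-R BT.601 luma weights (the page does not say which conversion is used), the detection area is a rectangle given as `(x, y, width, height)`, and "above 1344×800" is read as either frame dimension being larger. The function names are hypothetical and not part of any vendor SDK.

```python
from typing import List, Optional, Sequence, Tuple

# Thresholds taken from the requirements listed above.
BRIGHT_GRAY_THRESHOLD = 40       # a pixel is "bright" if its gray value is > 40
MIN_BRIGHT_RATIO = 0.50          # at least 50% of detection-area pixels must be bright
REF_WIDTH, REF_HEIGHT = 1344, 800
MIN_TARGET_W, MIN_TARGET_H = 40, 66

Roi = Tuple[int, int, int, int]  # hypothetical (x, y, width, height) detection area


def to_gray(r: float, g: float, b: float) -> float:
    """Gray value from RGB using ITU-R BT.601 weights (assumed conversion)."""
    return 0.299 * r + 0.587 * g + 0.114 * b


def rgb_frame_to_gray(frame_rgb: Sequence[Sequence[Tuple[int, int, int]]]) -> List[List[float]]:
    """Convert a row-major frame of (r, g, b) tuples to gray values."""
    return [[to_gray(r, g, b) for (r, g, b) in row] for row in frame_rgb]


def bright_pixel_ratio(gray: Sequence[Sequence[float]], roi: Roi) -> float:
    """Fraction of pixels inside the ROI whose gray value exceeds the threshold."""
    x, y, w, h = roi
    total = bright = 0
    for row in gray[y:y + h]:
        for value in row[x:x + w]:
            total += 1
            if value > BRIGHT_GRAY_THRESHOLD:
                bright += 1
    return bright / total if total else 0.0


def meets_brightness(gray: Sequence[Sequence[float]], roi: Roi) -> bool:
    """True if the ROI satisfies the minimum bright-pixel ratio."""
    return bright_pixel_ratio(gray, roi) >= MIN_BRIGHT_RATIO


def meets_target_size(frame_w: int, frame_h: int, box_w: int, box_h: int) -> Optional[bool]:
    """Minimum target-size check; returns None where the stated rule does not apply."""
    # Assumption: "above 1344x800" means either frame dimension is larger.
    if frame_w > REF_WIDTH or frame_h > REF_HEIGHT:
        return box_w >= MIN_TARGET_W and box_h >= MIN_TARGET_H
    return None


if __name__ == "__main__":
    # Synthetic 4x4 gray frame: top two rows dark (10), bottom two rows bright (200).
    frame = [[10] * 4, [10] * 4, [200] * 4, [200] * 4]
    print(bright_pixel_ratio(frame, (0, 0, 4, 4)))  # 0.5
    print(meets_brightness(frame, (0, 0, 4, 4)))    # True
    print(meets_target_size(1920, 1080, 32, 80))    # False (narrower than 40 px)
```

The strict `>` comparison mirrors the stated "grayscale value > 40"; whether the production check treats the 50% ratio and the size limits inclusively is not documented, so the sketch assumes it does.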

Application Value

Construction Sites

In fire-prone scenarios at construction sites, such as welding operations and material storage, the smoke and fire detection algorithm automatically monitors crane zones and temporary warehouses. It identifies fires in real time, issues rapid alerts to reduce fire risk, and cuts manual patrol costs.

Industrial Parks

The algorithm monitors high-risk areas such as chemical raw material storage and equipment operation zones. It accurately detects smoke and fire, enabling prompt hazard mitigation that improves safety management and keeps production running stably.

Warehouses/Workshops

To address fire hazards such as stockpiled goods in warehouses and aging electrical circuits in workshops, the algorithm monitors shelves and production lines, detecting smoke and fire and issuing early warnings. This reduces the risk of cargo loss and personnel casualties and helps protect assets.

FAQ

Algorithm Accuracy

All algorithms published on the website have a stated accuracy above 90%. However, accuracy can drop in real-world use for the following reasons:

(1) Poor image quality, for example:

• Strong light, backlight, nighttime, rain, snow, or fog degrading image quality

• Low resolution, motion blur, lens contamination, compression artifacts, or sensor noise

• Targets being partially or fully occluded (common in object detection, tracking, and pose estimation)

(2) The website provides two broad classes of algorithms: general-purpose and long-tail (rare scenes, uncommon object categories, or insufficient training data). Long-tail algorithms typically exhibit weaker generalization.

(3) Accuracy is not guaranteed in edge cases or extreme scenarios.

Deployment & Inference

We offer multiple deployment formats: models, applets, and SDKs.

Compatibility has been verified with more than ten domestic chip vendors, including Huawei Ascend, Iluvatar, and Denglin, ensuring full support for China-made CPUs, GPUs, and NPUs and meeting stringent IT application innovation (Xinchuang) requirements.

For each hardware configuration, we select and deploy a high-accuracy model whose parameter count is optimally matched to the available compute power.

How to Customize an Algorithm

All algorithms showcased on the website come with ready-to-use models and corresponding application examples. If you need further optimization or customization, choose one of the following paths:

(1) Standard Customization (highest accuracy, longer lead time)

Requirements discussion → collect valid data (≥1,000 images or ≥100 video clips from your scenario) → custom algorithm development & deployment → acceptance testing

(2) Rapid Implementation (Monolith: https://monolith.sensefoundry.cn/)

Monolith provides an intuitive, web-based interface that requires no deep AI expertise. In as little as 30 minutes you can upload data, leverage smart annotation, train, and deploy a high-performance vision model end-to-end—dramatically shortening the algorithm production cycle.
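Before starting the Standard Customization path, a quick count can show whether a collected dataset reaches the stated minimum of 1,000 images or 100 video clips. This is a minimal Python sketch; the file extensions treated as images or clips and the function names are assumptions for illustration. Counting files cannot tell whether the data is valid, that is, representative of your scenario, so it is only a first filter.

```python
import sys
from pathlib import Path
from typing import Tuple

# Minimums quoted from the Standard Customization path.
MIN_IMAGES = 1000
MIN_VIDEO_CLIPS = 100

# Assumption: the accepted file types are not specified; adjust to your data.
IMAGE_EXTS = {".jpg", ".jpeg", ".png", ".bmp"}
VIDEO_EXTS = {".mp4", ".avi", ".mov", ".mkv"}


def count_samples(root: str) -> Tuple[int, int]:
    """Recursively count image files and video clips under root by file extension."""
    images = clips = 0
    for path in Path(root).rglob("*"):
        if not path.is_file():
            continue
        ext = path.suffix.lower()
        if ext in IMAGE_EXTS:
            images += 1
        elif ext in VIDEO_EXTS:
            clips += 1
    return images, clips


def meets_data_requirement(images: int, clips: int) -> bool:
    """True if either minimum is met: >= 1,000 images or >= 100 video clips."""
    return images >= MIN_IMAGES or clips >= MIN_VIDEO_CLIPS


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python check_dataset.py <dataset_dir>
    n_img, n_vid = count_samples(sys.argv[1])
    print(f"{n_img} images, {n_vid} video clips; "
          f"meets minimum: {meets_data_requirement(n_img, n_vid)}")
```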